Did you know that you can tell search engine crawlers which parts of your site they may crawl, down to individual pages?

You do this with a file called robots.txt.

Robots.txt is a simple text file that sits in the root directory of your site. It tells “robots” (such as search engine spiders) which pages on your site to crawl and which pages to ignore.

While not essential, the robots.txt file gives you a lot of control over how Google and other search engines crawl your site.

When used correctly, it can improve crawling and even help your SEO.

But how exactly do you create an effective robots.txt file? Once created, how do you use it? And what mistakes should you avoid?

In this post, I’ll share everything you need to know about the robots.txt file and how to use it on your blog.

Explanation for the above code

This code is divided into three sections. Let’s first study each of them after that we will learn how to add custom robots.txt file in blogspot blogs.

User-agent: Mediapartners-Google

This code is for Google Adsense robots which help them to serve better ads on your blog. Either you are using Google Adsense on your blog or not simply leave it as it is.

User-agent: *

This is for all robots marked with asterisk (*). In default settings our blog’s labels links are restricted to indexed by search crawlers that means the web crawlers will not index our labels page links because of below code.

Disallow: /search

That means the links having keyword search just after the domain name will be ignored. See below example which is a link of label page named SEO.

http://www.yourblogurl.com/search/label/SEO

And if we remove Disallow: /search from the above code then crawlers will access our entire blog to index and crawl all of its content and web pages.

Here Allow: / refers to the Homepage that means web crawlers can crawl and index our blog’s homepage.

Disallow Particular Post

Now suppose if we want to exclude a particular post from indexing then we can add below lines in the code.

Disallow: /yyyy/mm/post-url.html

Here yyyy and mm refers to the publishing year and month of the post respectively. For example if we have published a post in year 2020 in month of July then we have to use below format.

Disallow: /2020/07/post-url.html

To make this task easy, you can simply copy the post URL and remove the blog name from the beginning.

Disallow Particular Page

If we need to disallow a particular page then we can use the same method as above. Simply copy the page URL and remove blog address from it which will something look like this:

Disallow: /p/page-url.html

User-agent: Mediapartners-Google

Disallow:

User-agent: *

Disallow: /search

Allow: /

Sitemap: http://example.blogspot.com/feeds/posts/default?orderby=UPDATED

Note: This above sitemap will only tell the web crawlers about the recent 25 posts. If you want to increase the number of link in your sitemap then replace default sitemap with below one. It will work for first 500 recent posts.

User-agent: Mediapartners-Google

Disallow:

User-agent: *

Disallow: /search

Allow: /

Sitemap: http://example.blogspot.com/atom.xml?redirect=false&start-index=1&max-results=500

If you have more than 500 published posts in your blog then you can use two sitemaps like below:

User-agent: Mediapartners-Google

Disallow:

User-agent: *

Disallow: /search

Allow: /

Sitemap: http://example.blogspot.com/atom.xml?redirect=false&start-index=1&max-results=500Sitemap: http://example.blogspot.com/atom.xml?redirect=false&start-index=500&max-results=1000

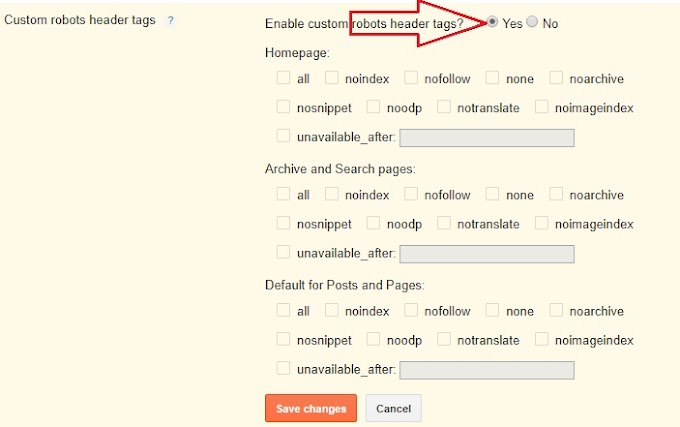

2. Open Settings >> Search Preferences >> Crawlers and indexing >> Custom robots.txt >> Edit >> Yes

3. Here you can make changes in the robots.txt file

4. After making changes, click Save Changes button

http://www.yourblogurl.blogspot.com/robots.txt

Once you visit the robots.txt file URL you will see the entire code which you are using in your custom robots.txt file.

If search engine bots can spend their crawl budgets wisely, they’ll organize and display your content in the SERPs in the best way, which means you’ll be more visible.

Make sure not to put any code in your custom robots.txt settings without knowing about it. Simply ask me to resolve your queries. I’ll tell you everything in detail.

I hope you’ll get the benefit of this post and get better search engine presence and ranking.

This is it! Should you have any questions regarding custom robots.txt file for Blogger/Blogspot, do let me know in the comments section. I will try my best to assist you.

The way this is done is through a file called Robots.txt.

Robots.txt is a simple text file that sites in the root directory of your site. It tells “robots” (such as search engine spiders) which pages to crawl on your site, which pages to ignore.

While not essential, the Robots.txt file gives you a lot of control over how Google and other search engines see your site.

When used right, this can improve crawling and even impact SEO.

But how exactly do you create an effective Robots.txt file? Once created, how do you use it? And what mistakes should you avoid while using it?

In this post, I’ll share everything you need to know about the Robots.txt file and how to use it on your blog.

What is Robots.txt?

Robots.txt is a text file on the server in which you list the directories, web pages, or links that search engine bots should not crawl. In other words, it lets you stop bots from crawling certain directories, pages, or links of your website or blog. Custom robots.txt is now available for Blogger too.

In Blogger, the search option is tied to labels: label pages live under the /search path (for example, /search/label/SEO). If you are not using labels carefully on each post, you should disallow crawling of the search links; by default, Blogger already disallows them.

In robots.txt, you can also give the location of your sitemap file. A sitemap is a file on the server that lists the permalinks of all the posts on your website or blog. Sitemaps are usually in XML format, i.e., sitemap.xml.

Why Robots.txt File is Important?

Well, the success of a professional blog usually depends on how Google ranks it. Our website structure holds many posts, pages, files, and directories, and often we don’t want Google to crawl all of them. For example, you may have a file for internal use that is of no use to search engines, and you don’t want it to appear in search results. It therefore makes sense to keep crawlers away from such files. Keep in mind, though, that robots.txt only controls crawling: the file itself is public, and a blocked URL can still show up in search results if other pages link to it, so it is not a way to hide private content.

The robots.txt file contains directives that all the major search engines honor. Using these directives, you can tell web spiders to ignore certain parts of your website or blog. Compliance is voluntary, though, so badly behaved bots may ignore them.

Always remember that well-behaved search crawlers read the robots.txt file before crawling any page on your site.
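To see this from the crawler’s side, here is a minimal Python sketch using the standard library’s urllib.robotparser module. It parses the default Blogger rules shown in this post (example.blogspot.com is a placeholder blog address) and asks whether a given user agent may fetch a URL, which is the check a polite crawler makes before requesting a page. Python’s parser is a simplified implementation, so treat it as an illustration rather than an exact model of how Google reads your file.

```python
from urllib.robotparser import RobotFileParser

# Default Blogger rules; example.blogspot.com is a placeholder blog address.
ROBOTS_TXT = """\
User-agent: Mediapartners-Google
Disallow:

User-agent: *
Disallow: /search
Allow: /

Sitemap: http://example.blogspot.com/feeds/posts/default?orderby=UPDATED
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text the way a crawler would after downloading it."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


parser = build_parser(ROBOTS_TXT)

post_url = "http://example.blogspot.com/2020/07/post-url.html"
label_url = "http://example.blogspot.com/search/label/SEO"

# A generic crawler such as Googlebot falls under "User-agent: *".
print(parser.can_fetch("Googlebot", post_url))   # True
print(parser.can_fetch("Googlebot", label_url))  # False
# The AdSense crawler has an empty Disallow, so nothing is blocked for it.
print(parser.can_fetch("Mediapartners-Google", label_url))  # True
# Sitemap lines are collected independently of any user-agent group.
print(parser.site_maps())
```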

Check out:

• Create Blogger Sitemap and Add to Google Webmaster Tools

• Custom Robots Header Tags Settings For Blogger (Blogspot)

• How To Create HTML Sitemap Page In Blogger


Custom Robots.txt for Blogger/Blogspot

Because Blogger/Blogspot is a free hosted blogging service, the robots.txt file of your blog used to be outside your direct control. Blogger now lets you edit it and create a custom robots.txt for each blog. The robots.txt of a Blogger/Blogspot blog typically looks like this:

User-agent: Mediapartners-Google
Disallow:

User-agent: *
Disallow: /search
Allow: /

Sitemap: http://example.blogspot.com/feeds/posts/default?orderby=UPDATED

Explanation for the above code

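This code is divided into three sections: the Mediapartners-Google group, the group for all other robots (User-agent: *), and the Sitemap line. Let’s look at each of them first; after that, we will learn how to add a custom robots.txt file to a Blogspot blog.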

User-agent: Mediapartners-Google

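This group applies to the Google AdSense crawler (Mediapartners-Google), which crawls your pages so that it can serve more relevant ads on your blog. The empty Disallow: line under it means nothing is blocked for this crawler. Whether or not you use Google AdSense on your blog, simply leave it as it is.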

User-agent: *

The asterisk (*) matches all robots. With the default settings, search crawlers are kept away from your blog’s label pages, so they will not crawl those links, because of the following rule:

Disallow: /search

This means that any URL whose path starts with /search right after the domain name will be skipped. For example, here is the link to a label page named SEO:

http://www.yourblogurl.com/search/label/SEO

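If you remove Disallow: /search from the code above, crawlers will be able to crawl your entire blog, including its label and search pages.

Allow: / matches every URL on the blog, because every path starts with /. It lets web crawlers crawl your homepage and all your other pages. It does not override Disallow: /search, because Google applies the most specific (longest) matching rule, so URLs under /search stay blocked.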
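A simplified, hypothetical Python sketch of Google’s documented precedence (the longest matching path wins, and Allow wins a tie) shows why Allow: / does not undo Disallow: /search. It only does plain prefix matching and ignores the * and $ wildcards that Google also supports. Python’s own urllib.robotparser, by contrast, applies the first matching rule in file order; for the default Blogger file both approaches give the same answers.

```python
from typing import List, Tuple

# Each rule is (directive, path), e.g. ("disallow", "/search").
Rule = Tuple[str, str]


def is_allowed(rules: List[Rule], path: str) -> bool:
    """Return True if `path` may be crawled under `rules` (hypothetical helper).

    Simplified longest-match: among rules whose non-empty path is a prefix
    of `path`, the longest one wins; on a tie, allow beats disallow.
    Wildcards (* and $) are not supported in this sketch.
    """
    best_len = -1
    allowed = True  # no matching rule means the URL is allowed
    for directive, rule_path in rules:
        # An empty "Disallow:" blocks nothing, so it never matches here.
        if not rule_path or not path.startswith(rule_path):
            continue
        is_allow = directive.lower() == "allow"
        if len(rule_path) > best_len or (len(rule_path) == best_len and is_allow):
            best_len = len(rule_path)
            allowed = is_allow
    return allowed


# The "User-agent: *" group from the default Blogger robots.txt.
blogger_rules = [("disallow", "/search"), ("allow", "/")]

print(is_allowed(blogger_rules, "/"))                       # True
print(is_allowed(blogger_rules, "/2020/07/post-url.html"))  # True
print(is_allowed(blogger_rules, "/search/label/SEO"))       # False
```

Because the decision depends on path length rather than on line order, swapping the Disallow and Allow lines would not change the result under this model.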

Disallow Particular Post

Now suppose you want to keep crawlers away from a particular post. In that case, add a line like the following to the User-agent: * group:

Disallow: /yyyy/mm/post-url.html

Here yyyy and mm are the year and month in which the post was published. For example, for a post published in July 2020, you would use this format:

Disallow: /2020/07/post-url.html

To make this easy, simply copy the post URL and remove the blog address from the beginning, keeping the leading slash.

Disallow Particular Page

To disallow a particular page, use the same method: copy the page URL and remove the blog address from it. The result will look something like this:

Disallow: /p/page-url.html
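The “copy the URL and remove the blog address” step for posts and pages is easy to automate. Here is a minimal Python sketch, assuming you start from full post or page URLs like the ones above; disallow_line is a hypothetical helper name. It keeps only the path, with its leading slash, and drops the query string (such as Blogger’s ?m=1 mobile parameter). Since Disallow rules match by prefix, the path-only rule also covers the ?m=1 version of the URL.

```python
from urllib.parse import urlsplit


def disallow_line(url: str) -> str:
    """Turn a full Blogger post or page URL into a robots.txt Disallow line.

    Hypothetical helper: it keeps only the path (which starts with "/") and
    drops the scheme, blog address, query string and fragment.
    """
    path = urlsplit(url).path or "/"
    return f"Disallow: {path}"


print(disallow_line("http://example.blogspot.com/2020/07/post-url.html"))
# Disallow: /2020/07/post-url.html
print(disallow_line("https://example.blogspot.com/p/page-url.html?m=1"))
# Disallow: /p/page-url.html
```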

User-agent: Mediapartners-Google
Disallow:

User-agent: *
Disallow: /search
Allow: /

Sitemap: http://example.blogspot.com/feeds/posts/default?orderby=UPDATED

Note: The sitemap above only tells web crawlers about your 25 most recent posts. To include more links in your sitemap, replace the default sitemap with the one below, which covers your 500 most recent posts.

User-agent: Mediapartners-Google
Disallow:

User-agent: *
Disallow: /search
Allow: /

Sitemap: http://example.blogspot.com/atom.xml?redirect=false&start-index=1&max-results=500

If your blog has more than 500 published posts, you can use two sitemaps, like this:

User-agent: Mediapartners-Google
Disallow:

User-agent: *
Disallow: /search
Allow: /

Sitemap: http://example.blogspot.com/atom.xml?redirect=false&start-index=1&max-results=500
Sitemap: http://example.blogspot.com/atom.xml?redirect=false&start-index=501&max-results=500

The second sitemap starts at post 501, so it picks up exactly where the first one ends.
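If your blog grows past 1,000 posts, the same pattern extends to more Sitemap lines. Here is a minimal Python sketch, assuming (as this post does) that each atom.xml request returns at most 500 posts; blogger_sitemap_lines is a hypothetical helper, not part of Blogger. Each page starts one past the end of the previous page (1, 501, 1001, ...) so that no post is listed twice.

```python
from typing import List


def blogger_sitemap_lines(blog_url: str, total_posts: int, page_size: int = 500) -> List[str]:
    """Build one Sitemap line per page of at most `page_size` posts.

    Hypothetical helper; assumes the atom.xml feed returns at most
    500 posts per request, as described in this post.
    """
    base = blog_url.rstrip("/")
    lines = []
    # start-index is 1-based: pages begin at 1, 501, 1001, ...
    for start in range(1, max(total_posts, 1) + 1, page_size):
        lines.append(
            f"Sitemap: {base}/atom.xml?redirect=false"
            f"&start-index={start}&max-results={page_size}"
        )
    return lines


for line in blogger_sitemap_lines("http://example.blogspot.com", 1200):
    print(line)
```

You would then paste the printed lines at the end of your custom robots.txt in place of the existing Sitemap line(s).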

Add Custom Robots.txt File on Blogger/Blogspot

1. Go to your Blogger dashboard.

2. Open Settings >> Search Preferences >> Crawlers and indexing >> Custom robots.txt >> Edit >> Yes

3. Here you can make changes in the robots.txt file

4. After making changes, click Save Changes button
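Before clicking Save Changes, it can help to sanity-check the text you pasted. Below is a minimal, hypothetical Python linter: it only knows the four directives used in this post (User-agent, Disallow, Allow, Sitemap), flags any other line (for example a typo such as “Disalow”), and warns if the catch-all group contains Disallow: /, which would block crawlers from your whole blog. It is not a full robots.txt validator.

```python
from typing import List

# Only the directives used in this post; real parsers accept a few more.
KNOWN_FIELDS = {"user-agent", "disallow", "allow", "sitemap"}


def lint_robots_txt(text: str) -> List[str]:
    """Return a list of warnings for a robots.txt draft (hypothetical helper)."""
    warnings = []
    current_agents: List[str] = []
    in_rules = False  # True once the current group has a rule line
    for number, raw in enumerate(text.splitlines(), start=1):
        line = raw.split("#", 1)[0].strip()  # drop comments and whitespace
        if not line:
            continue
        field, sep, value = line.partition(":")
        field, value = field.strip().lower(), value.strip()
        if not sep or field not in KNOWN_FIELDS:
            warnings.append(f"line {number}: unrecognized line {raw!r}")
            continue
        if field == "user-agent":
            if in_rules:  # a User-agent after rule lines starts a new group
                current_agents, in_rules = [], False
            current_agents.append(value)
        elif field in ("allow", "disallow"):
            in_rules = True
            if field == "disallow" and value == "/" and "*" in current_agents:
                warnings.append(
                    f"line {number}: 'Disallow: /' blocks the whole blog for all crawlers"
                )
    return warnings


draft = """User-agent: *
Disallow: /
"""
for warning in lint_robots_txt(draft):
    print(warning)
```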

How to Check Your Robots.txt File?

You can check this file on your blog by adding /robots.txt to the end of your blog URL in a web browser. For example: http://www.yourblogurl.blogspot.com/robots.txt

Once you open that URL, you will see the full contents of your custom robots.txt file.
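If you prefer to check from a script instead of the browser, here is a minimal Python sketch using only the standard library. The helper names robots_txt_url and fetch_robots_txt are hypothetical, and fetching requires network access. The URL helper relies on robots.txt always living at the root of the site, so it works even if you pass a post URL.

```python
from urllib.parse import urljoin
from urllib.request import urlopen


def robots_txt_url(blog_url: str) -> str:
    """Return the robots.txt address for a blog (the file lives at the site root)."""
    return urljoin(blog_url, "/robots.txt")


def fetch_robots_txt(blog_url: str, timeout: float = 10.0) -> str:
    """Download and return the live robots.txt text (needs network access)."""
    with urlopen(robots_txt_url(blog_url), timeout=timeout) as response:
        return response.read().decode("utf-8", errors="replace")
```

For example, calling fetch_robots_txt("https://yourblogurl.blogspot.com") with your own blog address should return the same text you see in the browser.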

Conclusion

By setting up your robots.txt file the right way, you’re not just enhancing your own SEO. You’re also helping out your visitors. If search engine bots can spend their crawl budgets wisely, they’re more likely to crawl the content that matters and show it in the SERPs, which means you’ll be more visible.

Don’t put any directive in your custom robots.txt settings unless you understand what it does; a single wrong line such as Disallow: / under User-agent: * would block crawlers from your entire blog. If you’re unsure, just ask me and I’ll explain everything in detail.

I hope this post helps you get a better search engine presence and ranking.

That’s it! If you have any questions about the custom robots.txt file for Blogger/Blogspot, let me know in the comments section. I will try my best to help.
